Why Standard Bioequivalence Studies Fail for Highly Variable Drugs

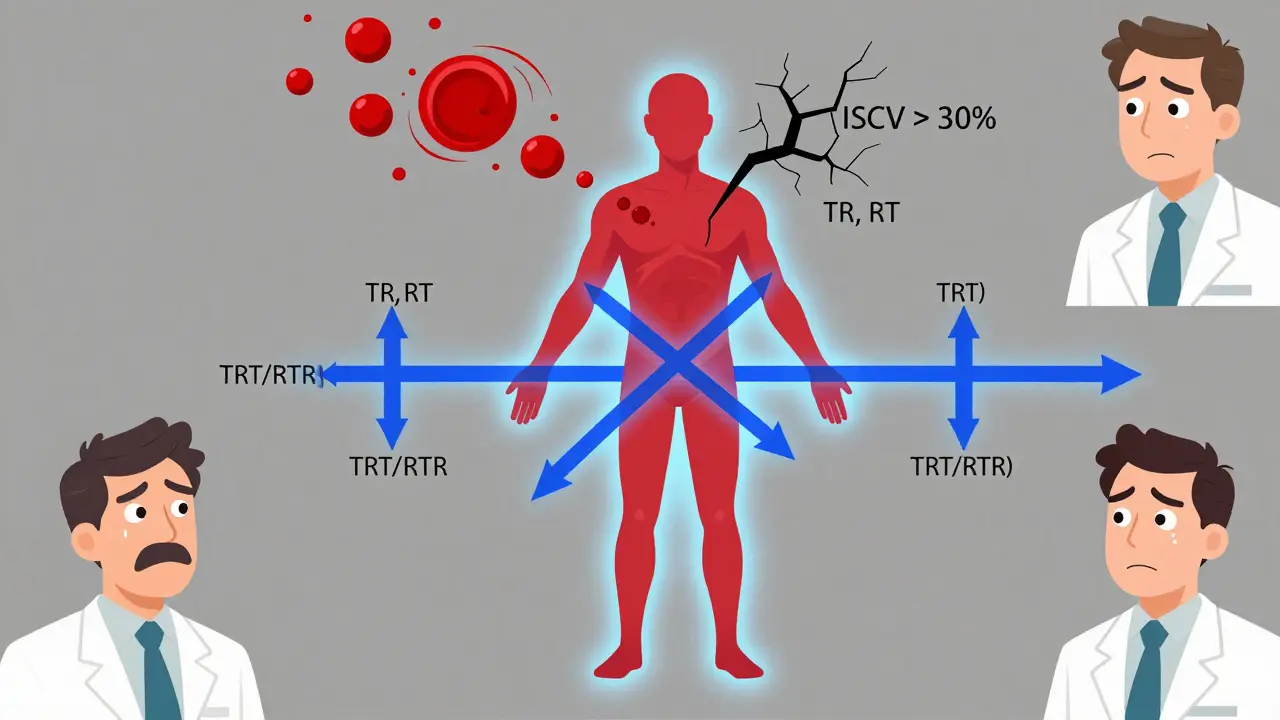

Imagine testing a drug that behaves differently in the same person from one day to the next. That’s the reality with highly variable drugs (HVDs): medications like warfarin, levothyroxine, or certain antiepileptics where blood levels can swing wildly even when the same dose is taken under identical conditions. Standard two-period crossover studies (TR, RT) were never built for this. They assume variability is mostly between people, not within the same person. When the within-subject coefficient of variation (ISCV) hits 30% or more, these designs become impractical. You’d need 100+ volunteers just to have a shot at proving bioequivalence. Most sponsors can’t afford that. And even if they could, the chance of failing due to random noise is too high.

That’s where replicate study designs come in. They don’t just repeat the same test; they let you measure how much the drug fluctuates inside each individual. This isn’t theoretical. The FDA started requiring them in the early 2000s after real-world data showed standard designs were failing 60% of the time for HVDs. The European Medicines Agency followed suit in 2010. Today, if your drug’s ISCV is above 30%, you’re not even allowed to try a standard design. You must use a replicate method.

Three Types of Replicate Designs and When to Use Each

There are three main replicate designs, each with a different balance of data, cost, and complexity. The choice isn’t arbitrary: it’s dictated by the drug’s variability and regulatory expectations.

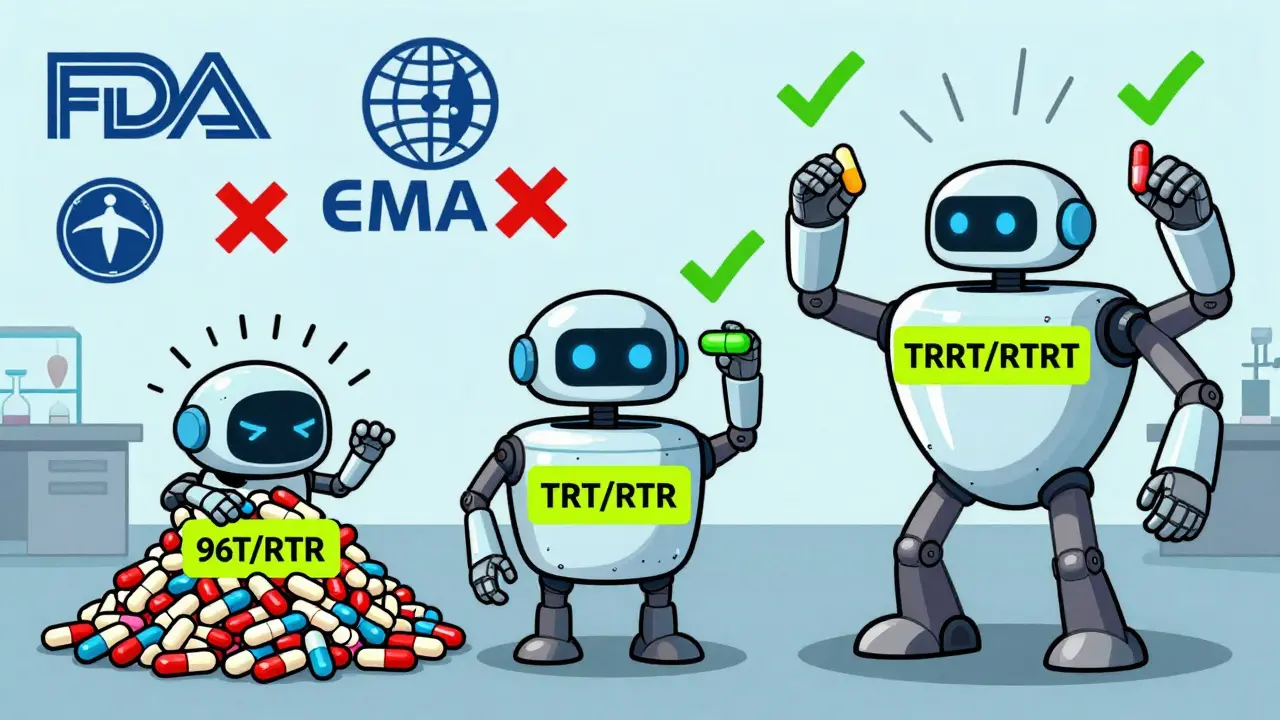

- Three-period full replicate (TRT/RTR): Each subject gets one product twice and the other once: the test drug twice in the TRT sequence, the reference drug twice in the RTR sequence. This is the most popular design globally. Across the two sequences it gives you both test and reference variability (CVwT and CVwR), which is critical for reference-scaled average bioequivalence (RSABE). The FDA and EMA both accept it. Industry surveys show 83% of CROs prefer this design for HVDs with ISCV between 30% and 50%. You need at least 24 subjects, with 12 completing the RTR sequence.

- Four-period full replicate (TRRT/RTRT): Each subject gets four treatments: test, reference, reference, test (or any permutation). This gives you the most precise estimate of both variabilities and is mandatory for narrow therapeutic index (NTI) drugs like warfarin. The FDA’s 2023 guidance on warfarin sodium specifically requires this design. It’s more expensive and takes longer, but when precision matters, there’s no alternative.

- Three-period partial replicate (TRR/RTR/RRT): Subjects only get the reference drug twice, never the test drug twice. This means you can only estimate reference variability (CVwR). It’s cheaper and faster than full replicate designs. The FDA accepts it for RSABE, but the EMA does not. It’s a good option if you’re targeting the U.S. market and your drug’s variability is moderate (ISCV 30-45%), but it’s risky for global submissions.
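To make "estimating reference variability" concrete, here is a minimal sketch of a moment-based CVwR estimate from the two reference administrations each subject receives. The function name and record layout are hypothetical, and this is a simplified illustration under the assumption of complete data, not a substitute for the mixed-effects analysis run in validated software.

```python
import math
from collections import defaultdict


def estimate_cv_wr(records):
    """Estimate within-subject variability of the reference product.

    records: iterable of (sequence, r_first, r_second), where r_first and
    r_second are one subject's PK metric (e.g. AUC) after the first and
    second reference administration. Hypothetical layout for illustration.
    Returns (s2_wr, cv_wr) with cv_wr as a fraction (0.30 means 30%).
    """
    diffs_by_seq = defaultdict(list)
    for sequence, r_first, r_second in records:
        # Work on the log scale, as is standard for AUC and Cmax.
        diffs_by_seq[sequence].append(math.log(r_first) - math.log(r_second))

    n_subjects = sum(len(d) for d in diffs_by_seq.values())
    n_sequences = len(diffs_by_seq)
    if n_subjects <= n_sequences:
        raise ValueError("need more subjects than sequences")

    # Centering within each sequence removes period effects from the differences.
    sum_sq = 0.0
    for diffs in diffs_by_seq.values():
        mean = sum(diffs) / len(diffs)
        sum_sq += sum((d - mean) ** 2 for d in diffs)

    # Var(R1 - R2) = 2 * s2_wr, hence the factor of 2 in the denominator.
    s2_wr = sum_sq / (2 * (n_subjects - n_sequences))
    cv_wr = math.sqrt(math.exp(s2_wr) - 1)
    return s2_wr, cv_wr
```

In a TRT/RTR study only subjects in the RTR sequence receive the reference twice, so only they contribute to this estimate; the same calculation on replicated test doses would give CVwT.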

Here’s the rule of thumb: if ISCV is under 30%, stick with a standard two-period design. Between 30% and 50%, go with TRT/RTR. Above 50%, or if it’s an NTI drug, use TRRT/RTRT. Don’t guess. Use historical data or pilot studies to estimate variability before you lock in the design.
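The rule of thumb can be written down as a small decision function. This is only a sketch of the thresholds stated in this article; the function name and return labels are made up for illustration, and it does not replace checking the product-specific guidance.

```python
def choose_design(iscv_percent, is_nti=False):
    """Map estimated ISCV (in percent) and NTI status to a study design.

    Thresholds follow the rule of thumb in the text: <30% standard 2x2,
    30-50% three-period full replicate, >50% or NTI four-period full replicate.
    """
    if is_nti or iscv_percent > 50:
        return "TRRT/RTRT"
    if iscv_percent >= 30:
        return "TRT/RTR"
    return "2x2 (TR/RT)"
```

For example, `choose_design(45)` returns `"TRT/RTR"`, while `choose_design(20, is_nti=True)` returns `"TRRT/RTRT"` because NTI status takes priority over variability.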

How Replicate Designs Cut Costs and Improve Success Rates

Let’s say you’re testing a drug with an ISCV of 45%. A standard two-period crossover would need about 96 subjects to reach 80% power. That’s a $1.2 million study, minimum. With a three-period full replicate design (TRT/RTR), you need only 28 subjects. That’s a 71% reduction in participants. The cost drops to around $400,000. And the approval rate? Studies using replicate designs have a 79% success rate for HVDs, compared to just 52% for non-replicate attempts.
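The savings quoted above are plain percentage reductions. A quick sketch of the arithmetic, using only the figures from the paragraph (the helper name is illustrative):

```python
def percent_reduction(before, after):
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100


# Figures taken from the example above.
subject_reduction = percent_reduction(96, 28)            # about 70.8, i.e. roughly 71%
cost_reduction = percent_reduction(1_200_000, 400_000)   # about 66.7
```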

Why? Because replicate designs let regulators scale the bioequivalence limits. Instead of forcing an 80-125% range, which is too tight for a drug that naturally swings 50% in one person, they widen the window based on how variable the reference product is. If the reference has high variability, the acceptable range for the test drug expands too. This isn’t lowering the bar; it’s adjusting it to match reality. A drug that’s 90% bioavailable in a person one week and 110% the next is still the same drug. The replicate design proves it.

Real-world proof? A clinical operations manager posted on the BEBAC forum in October 2023: their levothyroxine study with 42 subjects using TRT/RTR passed on the first submission. Their previous attempt with 98 subjects using a standard design failed twice. That’s not luck. That’s science.

The Hidden Costs: Dropout, Duration, and Statistical Complexity

Replicate designs aren’t magic. They come with trade-offs. The biggest one? Subject dropout. In a four-period study, you’re asking volunteers to come in four times over several weeks. For drugs with long half-lives, washout periods can stretch to 14 days or more. The average dropout rate is 15-25%. That means you have to recruit 20-30% more people than you think you need. One company on Reddit reported a 30% dropout in a long-half-life drug study, which added $187,000 to the budget and pushed the timeline out by eight weeks.
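Sizing the recruitment buffer is a one-line calculation: divide the number of completers you need by the expected completion rate and round up. A minimal sketch, with a hypothetical function name and the dropout rate given in whole percent:

```python
def required_enrollment(completers_needed, dropout_percent):
    """Subjects to enroll so that `completers_needed` remain after dropout.

    Uses ceiling division: enroll = ceil(needed * 100 / (100 - dropout)).
    """
    if not 0 <= dropout_percent < 100:
        raise ValueError("dropout_percent must be in [0, 100)")
    completion_percent = 100 - dropout_percent
    # -(-a // b) is integer ceiling division without floating-point rounding.
    return -(-completers_needed * 100 // completion_percent)
```

Note that dividing by the completion rate asks for slightly more subjects than simply adding the dropout percentage on top: for 24 completers, a 15% dropout rate calls for 29 enrolled and a 25% rate calls for 32.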

Then there’s the statistical side. You can’t just plug data into Excel. You need mixed-effects models, reference-scaling algorithms, and specialized software. Phoenix WinNonlin and the R package replicateBE (version 0.12.1) are the industry standards. Learning how to use them takes 80-120 hours of training. Many CROs hire external statisticians just to run the analysis. A 2022 AAPS workshop found that 62% of sponsors underestimated the time and expertise needed for analysis.

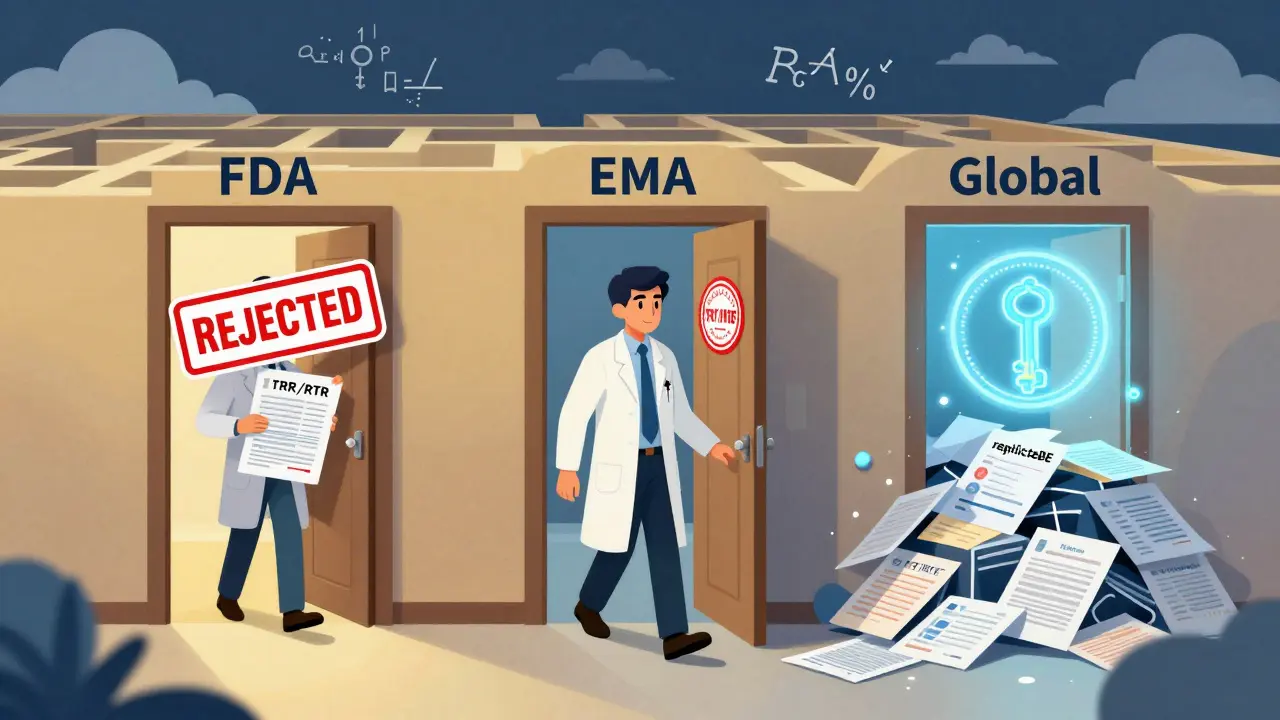

And don’t forget the regulatory minefield. The FDA and EMA have different rules. The EMA rejects partial replicate designs. The FDA requires at least 12 subjects in the RTR arm of a three-period study. Submit a four-period design to the EMA and they might question why you didn’t use the simpler three-period option. Cross-agency data shows 23% higher rejection rates for studies that follow one regulator’s design when submitting to another.

What the Industry Is Doing Differently in 2026

The field is evolving fast. In 2023, 68% of HVD bioequivalence studies used replicate designs. By 2026, that number is expected to hit 80%. The global market for these studies is growing at 15.3% per year. WuXi AppTec, PPD, and Charles River now dominate the space, but niche players like BioPharma Services are winning contracts by specializing in statistical design and regulatory strategy.

New trends are emerging. The FDA’s 2022 draft guidance on adaptive designs allows you to start with a replicate study but switch to a standard analysis if early data shows low variability. That’s a smart way to hedge your bets. Pfizer’s 2023 proof-of-concept study used machine learning to predict sample size needs with 89% accuracy-using historical BE data as input. It’s not science fiction anymore.

Regulatory harmonization is coming. The ICH is working on an update to E14/S6(R1) expected in Q3 2024. It’s likely to standardize RSABE methods across the U.S., EU, and Japan. But until then, you still need to tailor your design to your target market. Don’t assume what works in the U.S. will fly in Europe.

How to Get Started Right

If you’re planning your first replicate study, here’s how to avoid the common pitfalls:

- Estimate ISCV early: Use pilot data, literature, or historical BE studies. Don’t guess. If you’re unsure, run a small pilot with 12-16 subjects using a TRT/RTR design.

- Choose the right design: ISCV < 30%? Use 2x2. 30-50%? Use TRT/RTR. >50% or NTI? Use TRRT/RTRT.

- Over-recruit: Plan for 25% dropout. If you need 24 completers, enroll 32 (24 / 0.75); enrolling 30 only covers a 20% dropout.

- Use validated software: Install replicateBE or WinNonlin. Don’t try to code your own RSABE algorithm.

- Consult regulators early: Submit a pre-submission meeting request to the FDA or EMA. Ask: “Is this design acceptable?” Get it in writing.

There’s no shortcut. But if you do it right, you’ll save time, money, and frustration. And you’ll get your generic drug approved on the first try, not after three failed attempts.

What Happens If You Ignore Replicate Designs?

The FDA rejected 41% of HVD bioequivalence submissions in 2023 that didn’t use replicate designs. That’s not a typo. About two in five attempts were turned down because they used the wrong method. The EMA’s rejection rate for similar submissions was 37%. You’re not just risking delay; you’re risking total failure. And with the global market for generic HVDs projected to hit $18 billion by 2028, missing this step isn’t just costly. It’s career-limiting.

What is the minimum number of subjects required for a three-period full replicate bioequivalence study?

The minimum is 24 subjects, with at least 12 completing the RTR sequence. This requirement comes from the EMA’s 2010 bioequivalence guideline and is widely accepted by the FDA for three-period full replicate designs (TRT/RTR). While some studies may succeed with fewer subjects if variability is low, 24 is the standard baseline to ensure statistical power and regulatory acceptance.
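A completer check like the one described above is easy to automate before database lock. The sketch below assumes you already have one sequence label per subject who completed all three periods; the function name and the returned keys are hypothetical.

```python
from collections import Counter


def check_three_period_completers(completed_sequences, min_total=24, min_rtr=12):
    """Check the completer counts stated in the text for a TRT/RTR study.

    completed_sequences: one label ("TRT" or "RTR") per subject who
    completed all periods. Defaults mirror the 24 / 12 figures above.
    """
    counts = Counter(completed_sequences)
    total = sum(counts.values())
    return {
        "total": total,
        "rtr": counts["RTR"],
        "meets_total": total >= min_total,
        "meets_rtr": counts["RTR"] >= min_rtr,
    }
```

With 14 TRT completers and 10 RTR completers, the study reaches 24 in total but still falls short on the RTR sequence, which is exactly the case that gets missed when only the overall number is tracked.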

Can I use a partial replicate design for a submission to the European Medicines Agency (EMA)?

No. The EMA does not accept partial replicate designs (TRR/RTR/RRT) for reference-scaled bioequivalence. They require full replicate designs (TRT/RTR or TRRT/RTRT) to estimate within-subject variability for both the test and reference products. Using a partial design for an EMA submission will result in immediate rejection. If you’re targeting the EU market, always use a full replicate design.

Which software is used to analyze replicate study data for bioequivalence?

The industry standard is the R package replicateBE (version 0.12.1 or later), which is freely available on CRAN and specifically designed for reference-scaled average bioequivalence (RSABE). Phoenix WinNonlin is also widely used, especially in regulated environments where commercial software is preferred. Both tools implement FDA and EMA-approved algorithms for mixed-effects modeling and variability scaling. Avoid generic statistical software like SPSS or SAS unless you’ve validated your own code against these established packages.

When is a four-period full replicate design mandatory?

A four-period full replicate design (TRRT/RTRT) is mandatory for narrow therapeutic index (NTI) drugs, such as warfarin, levothyroxine, and phenytoin. The FDA explicitly requires this design in its 2019 and 2023 guidances because precise estimation of both test and reference variability is critical to patient safety. Even if the drug’s variability is moderate, NTI status overrides all other considerations. Always default to a four-period design if your drug is classified as NTI.

How does reference-scaling work in replicate designs?

Reference-scaling adjusts the bioequivalence acceptance range based on the within-subject variability of the reference product. If the reference drug has high variability (ISCV > 30%), the acceptable range for the test drug widens beyond the standard 80-125%. For example, under the EMA’s approach an ISCV of 40% widens the range to roughly 74.6-134.0%, and the widening is capped at 69.84-143.19% once ISCV reaches 50%. This prevents unfairly rejecting a bioequivalent product just because the reference itself fluctuates. The scaling formula is defined in FDA and EMA guidelines. The formula itself is short, but the variability estimate that feeds it must come from a properly fitted model in validated software.
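As an illustration of how the widening works, here is a minimal sketch of the EMA-style expanded limits: the within-subject standard deviation is derived from CVwR on the log scale, multiplied by the regulatory constant 0.760, and exponentiated, with the widening capped at a CVwR of 50%. The function name is hypothetical. The FDA’s RSABE criterion is formulated differently (as a scaled test statistic rather than fixed limits) and is not reproduced here.

```python
import math


def expanded_limits(cv_wr_percent, k=0.760, cap_percent=50.0):
    """EMA-style widened acceptance limits, returned in percent.

    At or below 30% CVwR the conventional 80-125% limits apply.
    Above that, limits are exp(-/+ k * s_wR), with s_wR computed from
    CVwR and the widening capped at `cap_percent`.
    """
    if cv_wr_percent <= 30:
        return 80.0, 125.0
    cv = min(cv_wr_percent, cap_percent) / 100
    # Convert CV to the within-subject SD on the log scale.
    s_wr = math.sqrt(math.log(cv ** 2 + 1))
    lower = math.exp(-k * s_wr) * 100
    upper = math.exp(k * s_wr) * 100
    return lower, upper
```

Calling `expanded_limits(40)` gives limits of about 74.6% and 134.0%; any CVwR of 50% or more returns the capped limits of about 69.84% and 143.19%. Passing the limits is not the whole test: the point estimate typically still has to fall within 80-125%.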

What’s the biggest mistake sponsors make with replicate designs?

The biggest mistake is underestimating subject dropout and not over-recruiting. Many sponsors plan for exactly the minimum number of subjects needed, assuming everyone will complete all periods. In reality, 15-25% drop out due to side effects, scheduling conflicts, or personal reasons. Without a buffer, you risk having insufficient data to complete the analysis. Always recruit 20-30% more than your target. Another common error is using the wrong statistical model, such as applying a standard ANOVA instead of a mixed-effects model, which leads to incorrect variability estimates and regulatory rejection.

6 Comments

Blair Kelly

January 31 2026

Your entire post is technically accurate, but you missed one critical point: the statistical models used in replicate designs are not robust to non-normality or missing data patterns. The replicateBE package, while convenient, assumes homoscedasticity and complete data, conditions rarely met in real-world clinical trials. If your residual variance is heteroskedastic, your RSABE confidence intervals are invalid. This is not a minor oversight; it’s a regulatory liability. You need to validate your model diagnostics before submission. Period.

Rohit Kumar

February 1 2026

In India, we see this differently. We don’t have the luxury of $400,000 studies. Many of us use partial replicates anyway, because the alternative is no drug at all. The EMA’s rigidity ignores the reality of global access. Science should serve humanity, not regulatory purity. If a drug works safely in 95% of patients, why should we delay its availability because a statistician in Brussels says the variance isn’t estimated ‘correctly’? We’re not playing chess. We’re saving lives.

Lily Steele

February 1 2026

This is so helpful. I just started working on my first HVD project and was terrified of messing up the design. The dropout tip? Game changer. We’re enrolling 30 instead of 24. Also, the software advice saved me-my boss wanted to use SAS. Nope. replicateBE it is. Thanks for making this feel less intimidating.

Jodi Olson

February 2 2026

The idea that variability within a single person should dictate regulatory thresholds is philosophically profound. It suggests that identity itself, in the sense of biological consistency, is not absolute. If a drug behaves differently in the same body across time, then bioequivalence isn’t about chemical sameness; it’s about functional resilience. That’s not just pharmacology. It’s epistemology.

calanha nevin

February 3 2026

For anyone new to this: if you're submitting to the FDA and using a three-period design, make sure at least 12 subjects complete the RTR sequence. This isn't optional. I’ve reviewed 17 submissions in the last year. Eight were rejected outright because the sponsor assumed '24 total subjects' meant '24 in each sequence.' It doesn't. The RTR arm must have 12 completers. Always double-check the EMA and FDA guidance side by side. One typo in your statistical analysis plan and your entire program gets shelved.

Beth Cooper

January 31 2026

I've seen this before. The FDA's 'replicate design' push? Total corporate scam. Big Pharma wants you to spend $400K on a study so they can keep charging $500 for a pill that's basically sugar. They're not protecting patients; they're protecting profits. And don't get me started on 'reference-scaling'. That's just fancy math to let bad generics slip through. I've got sources. I know what's really going on.